The automatic analysis of facial expressions is motivated by the essential role that the face plays in our emotional and social life. Facial expression is one of the most convincing and natural means that human beings have to communicate emotions and intentions, and to clarify and emphasise what they say. Furthermore, unlike other non-verbal channels, facial expressions are cross-cultural and universal: they do not depend on the ethnicity, age or gender of the individual.

In the context of an interview or interrogation, the analysis of facial expressions can provide invaluable support to the observer. The observer can assess, for example, at what moments expressions occur in relation to the question posed: while the subject is listening to it, while processing the information, when answering, or after having given the answer. It is also useful for detecting emotional incongruities, that is, situations in which the subject verbally expresses one emotion while showing a very different one on the face. Likewise, the direction of the gaze and the orientation of the head over time reflect the interviewee’s degree of attention, giving clues about their interest, abilities and certain personality traits.
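As a rough illustration of the incongruity idea, the sketch below compares the emotion a subject states verbally with the emotion that dominates the per-frame labels observed while they speak. The function names and the "most frequent label" rule are assumptions made for this example; they do not describe how any particular product decides incongruity.

```python
from collections import Counter
from typing import Optional, Sequence


def dominant_emotion(observed: Sequence[Optional[str]]) -> Optional[str]:
    """Return the most frequent emotion label, ignoring frames with no label."""
    counts = Counter(label for label in observed if label is not None)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def is_incongruent(stated: str, observed: Sequence[Optional[str]]) -> bool:
    """Flag a mismatch between the stated emotion and the dominant observed one.

    Hypothetical rule: if no face label is available at all, nothing is flagged.
    """
    dominant = dominant_emotion(observed)
    return dominant is not None and dominant != stated


# The subject says they are happy, but most frames were labelled "fear".
frames = ["fear", "fear", None, "joy", "fear"]
flag = is_incongruent("joy", frames)
```

A real analysis would weigh intensity and timing as well as frequency; the point here is only that the comparison is between a verbal channel and a facial one.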

Herta explains how facial expression analysis works

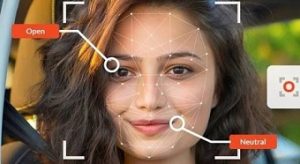

A system such as Bioobserver analyses the face frame by frame, either from a pre-recorded video or from a live camera capture. It begins by detecting the presence and location of the face within the frame.

Next, it extracts a series of characteristic points of the face (for example, around the eyes, eyebrows, nose and mouth). Finally, the basic emotions, micro-expressions and behavioural metrics are extracted.
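The three stages described above (face detection, characteristic points, classification) can be sketched as a simple per-frame loop. This is a minimal sketch, not Bioobserver's implementation: `analyse_frames`, `FrameResult` and the three stage callables are hypothetical names, and the stages are passed in as plain functions so that any detector or classifier could be plugged in.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, Iterator, Optional, Sequence, Tuple

Frame = Any                       # whatever image type the capture source yields
Box = Tuple[int, int, int, int]   # x, y, width, height of the detected face
Point = Tuple[float, float]       # one characteristic point (x, y)


@dataclass
class FrameResult:
    index: int
    face: Optional[Box]
    landmarks: Sequence[Point]
    emotion: Optional[str]


def analyse_frames(
    frames: Iterable[Frame],
    detect_face: Callable[[Frame], Optional[Box]],
    extract_landmarks: Callable[[Frame, Box], Sequence[Point]],
    classify: Callable[[Frame, Box, Sequence[Point]], str],
) -> Iterator[FrameResult]:
    """Run the three stages on every frame, skipping frames without a face."""
    for index, frame in enumerate(frames):
        face = detect_face(frame)                  # stage 1: is there a face, and where?
        if face is None:
            yield FrameResult(index, None, [], None)
            continue
        landmarks = extract_landmarks(frame, face)  # stage 2: characteristic points
        emotion = classify(frame, face, landmarks)  # stage 3: emotion label
        yield FrameResult(index, face, landmarks, emotion)
```

Because the function is a generator, it works equally for a pre-recorded video (a finite sequence of frames) and for a live camera (an open-ended stream).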

The classification algorithms are based on Deep Learning, an advanced Artificial Intelligence technique that uses deep neural networks. These algorithms automatically extract the most relevant information from the face, such as patterns and textures (for example, the presence of wrinkles around the eyes or the shape of the mouth). Our system has been trained on an extensive database of millions of images of subjects of different ages, genders and ethnicities. This allows Bioobserver to behave robustly across very different subjects, with very high recognition rates.

The field of Psychology considers that human beings have a small number of basic emotions, from which our entire affective range is built. These emotions are innate, and their corresponding facial expressions are universally recognised.

The 7 most commonly used categories of basic emotions are based on those proposed by the psychologist Paul Ekman: “joy”, “sadness”, “fear”, “anger”, “aversion” (disgust), “surprise” and “neutral”.
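A classifier over these seven categories typically produces one raw score per category, which is then turned into probabilities. The sketch below shows that last step with a softmax, a common choice for neural network classifiers; whether Bioobserver uses exactly this is an assumption, and `EMOTIONS`, `softmax` and `label_emotion` are names invented for the example.

```python
import math
from typing import Dict, List, Sequence, Tuple

# The seven categories listed above, in a fixed (arbitrary) order.
EMOTIONS = ("joy", "sadness", "fear", "anger", "aversion", "surprise", "neutral")


def softmax(scores: Sequence[float]) -> List[float]:
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)                           # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def label_emotion(scores: Sequence[float]) -> Tuple[str, Dict[str, float]]:
    """Return the most probable emotion and the full probability table."""
    if len(scores) != len(EMOTIONS):
        raise ValueError("expected one score per emotion category")
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return EMOTIONS[best], dict(zip(EMOTIONS, probs))


# Hypothetical raw scores for a single frame.
label, table = label_emotion([0.1, 0.0, 0.0, 0.0, 0.0, 2.0, 0.3])
```

Keeping the full probability table, rather than only the winning label, is useful when two emotions score close to each other.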

The human face contains more than 43 muscles. Facial micro-expressions are the result of the activation of one or more of them. These are involuntary gestures, which can last as little as a twentieth of a second, and can reveal the state of mind that we want to hide. A well-trained eye can catch them, but they are almost imperceptible to non-expert observers. Paul Ekman’s Facial Action Coding System (FACS) lists all the micro-expressions or Action Units (AUs) that can occur on the face.
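Because micro-expressions are so brief, one simple way to look for them in a per-frame signal is to find short bursts where an Action Unit's intensity rises above a threshold and then drops again. The sketch below does this under stated assumptions: the intensity series, the `threshold` of 0.5 and the `max_duration_s` limit are illustrative values, not figures from FACS or from Bioobserver.

```python
from typing import List, Sequence, Tuple


def brief_activations(
    intensities: Sequence[float],
    fps: float,
    threshold: float = 0.5,
    max_duration_s: float = 0.5,
) -> List[Tuple[int, int]]:
    """Return (first_frame, last_frame) for each run of frames at or above
    `threshold` whose duration does not exceed `max_duration_s`."""
    events: List[Tuple[int, int]] = []
    start = None
    # A sentinel below any threshold closes a run that reaches the last frame.
    for i, value in enumerate(list(intensities) + [float("-inf")]):
        active = value >= threshold
        if active and start is None:
            start = i                              # a run begins
        elif not active and start is not None:
            duration_s = (i - start) / fps         # run covers frames start..i-1
            if duration_s <= max_duration_s:
                events.append((start, i - 1))
            start = None
    return events


# At 20 frames per second, a single active frame spans a twentieth of a second.
signal = [0.0, 0.9, 0.0, 0.0, 0.8, 0.8, 0.8, 0.8, 0.0]
bursts = brief_activations(signal, fps=20, max_duration_s=0.1)
```

In this example the one-frame burst is kept, while the four-frame activation (0.2 s) is treated as an ordinary, sustained expression. Note that the frame rate bounds what can be detected: a gesture shorter than one frame interval may never appear in the signal at all.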

Bioobserver allows the user to define a custom list of events of interest (for example, the start / end of a key question) and annotate them on the video in real time. These annotations can be viewed and exported to a file together with the rest of the extracted information (micro-expressions, basic emotions, valence / activation, gaze / head orientation). In this way, the tool makes it possible to relate events to the individual’s behaviour.
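To make the idea of relating events to behaviour concrete, the following sketch tags each timestamped emotion sample with the annotated event that was active at that moment and writes the result as CSV. The `Event` class, the column names and the CSV layout are assumptions for illustration; the actual export format of Bioobserver is not described here.

```python
import csv
import io
from dataclasses import dataclass
from typing import Iterable, Optional, Sequence, Tuple


@dataclass(frozen=True)
class Event:
    label: str       # e.g. "key question"
    start_s: float   # annotation start, in seconds from the start of the video
    end_s: float     # annotation end (exclusive)


def event_at(events: Sequence[Event], time_s: float) -> Optional[str]:
    """Return the label of the first event covering `time_s`, if any."""
    for event in events:
        if event.start_s <= time_s < event.end_s:
            return event.label
    return None


def export_csv(samples: Iterable[Tuple[float, str]], events: Sequence[Event]) -> str:
    """Write (time, emotion) samples with their active event as CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["time_s", "emotion", "event"])
    for time_s, emotion in samples:
        writer.writerow([f"{time_s:.2f}", emotion, event_at(events, time_s) or ""])
    return buffer.getvalue()


events = [Event("key question", 1.0, 2.0)]
samples = [(0.5, "neutral"), (1.5, "surprise")]
report = export_csv(samples, events)
```

With the data side by side in one table, it becomes straightforward to filter on an event and see which emotions appeared while it was in progress.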